CoPhilot

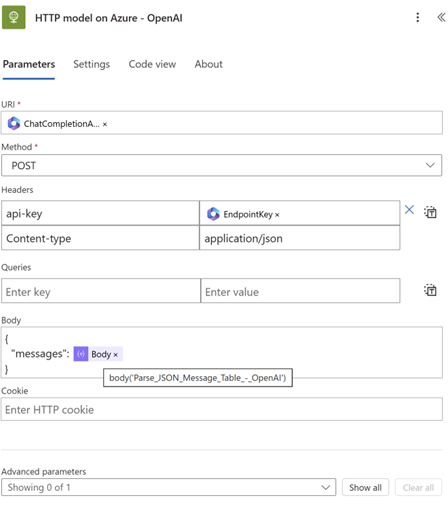

Calling an Azure AI model from Power Automate

Here we use the Power Automate HTTP connector because we are prototyping.

Should the solution take off, it is good practice to encapsulate the API in a custom connector.

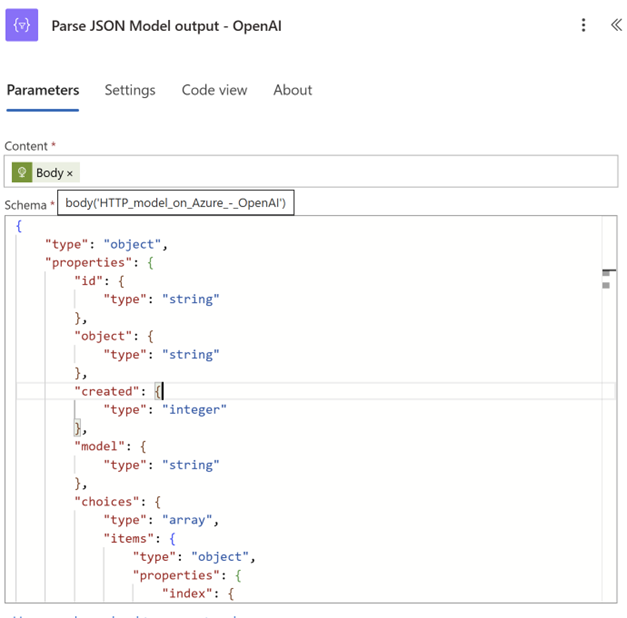
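To test the call outside Power Automate, you can approximate the HTTP action with the following minimal Python sketch. It only builds the request and does not send it. It assumes an OpenAI-style chat completions route (`<endpoint>/chat/completions`) authenticated with a Bearer key. The endpoint, the key, the `max_tokens` value, and the `build_chat_request` helper are placeholders or hypothetical. Check the route and authentication header that your own model deployment shows.

```python
import json
import urllib.request

# Placeholder values (hypothetical): replace with your deployment's endpoint and key.
ENDPOINT = "https://your-model-endpoint.example.com"
API_KEY = "your-api-key"


def build_chat_request(endpoint: str, api_key: str, messages: list[dict]) -> urllib.request.Request:
    """Build (but do not send) a chat completions POST request.

    Assumes an OpenAI-style '/chat/completions' route and Bearer authentication.
    """
    payload = {
        "messages": messages,  # whole conversation so far, system prompt first
        "max_tokens": 512,     # illustrative value only
    }
    return urllib.request.Request(
        url=endpoint.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Hello!"},
]
request = build_chat_request(ENDPOINT, API_KEY, messages)
# To actually send it: urllib.request.urlopen(request), then json.load the response.
```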

Parsing the Azure AI model response and retrieving the assistant answer

Here is the JSON schema used in the Parse JSON action:

{
  "type": "object",
  "properties": {
    "id": { "type": "string" },
    "object": { "type": "string" },
    "created": { "type": "integer" },
    "model": { "type": "string" },
    "choices": {
      "type": "array",
      "items": {
        "type": "object",
        "properties": {
          "index": { "type": "integer" },
          "message": {
            "type": "object",
            "properties": {
              "role": { "type": "string" },
              "content": { "type": "string" },
              "tool_calls": { "type": "array" }
            }
          },
          "finish_reason": { "type": "string" }
        },
        "required": ["index", "message", "finish_reason"]
      }
    },
    "usage": {
      "type": "object",
      "properties": {
        "prompt_tokens": { "type": "integer" },
        "total_tokens": { "type": "integer" },
        "completion_tokens": { "type": "integer" }
      }
    }
  }
}

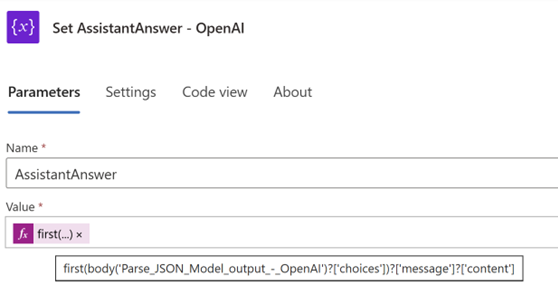
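As a quick sanity check of the schema, the following Python sketch parses a sample response and reports any field that the schema marks as required on each choice but that is missing. The sample payload is invented for illustration and is not real model output. The `missing_choice_fields` helper is hypothetical.

```python
import json

# Invented sample response shaped like the schema (not real model output).
SAMPLE_RESPONSE = """
{
  "id": "example-id",
  "object": "chat.completion",
  "created": 1700000000,
  "model": "example-model",
  "choices": [
    {
      "index": 0,
      "message": {"role": "assistant", "content": "Hello! How can I help?", "tool_calls": []},
      "finish_reason": "stop"
    }
  ],
  "usage": {"prompt_tokens": 12, "total_tokens": 20, "completion_tokens": 8}
}
"""

# Fields the schema lists under "required" for each item of "choices".
REQUIRED_CHOICE_FIELDS = ("index", "message", "finish_reason")


def missing_choice_fields(response: dict) -> list[str]:
    """Return a path for every required choice field that is absent."""
    problems = []
    for position, choice in enumerate(response.get("choices", [])):
        for field in REQUIRED_CHOICE_FIELDS:
            if field not in choice:
                problems.append(f"choices[{position}].{field}")
    return problems


response = json.loads(SAMPLE_RESPONSE)
print(missing_choice_fields(response))  # → []
```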

Here is the Power Automate expression (workflow expression syntax, not Power Fx):

first(body('Parse_JSON_Model_output_-_OpenAI')?['choices'])?['message']?['content']

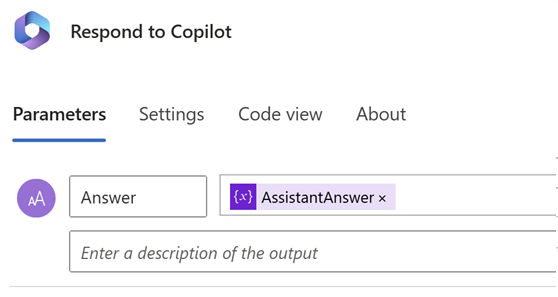
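To make the expression's behaviour concrete, here is a rough Python analogue. `first(...)` takes the first element of `choices`, and each `?[...]` is a null-safe property access that yields null instead of failing when the property is missing. The `assistant_answer` function is hypothetical and only mirrors the expression's logic. It does not reproduce Power Automate's exact runtime semantics.

```python
from typing import Any, Optional


def assistant_answer(body: dict[str, Any]) -> Optional[str]:
    """Rough analogue of: first(body(...)?['choices'])?['message']?['content']"""
    choices = body.get("choices") or []
    if not choices:
        return None  # no choices: nothing to read, returned as None here
    first_choice = choices[0]                 # first(...)
    message = first_choice.get("message")     # ?['message']
    if message is None:
        return None
    return message.get("content")             # ?['content']


parsed = {
    "choices": [
        {"index": 0, "message": {"role": "assistant", "content": "Hi there"}, "finish_reason": "stop"}
    ]
}
print(assistant_answer(parsed))           # → Hi there
print(assistant_answer({"choices": []}))  # → None
```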

Finally, we return the assistant answer to Copilot Studio.

Adding custom topics

At this stage we have essentially created a bot that keeps extending the same conversation until a new conversation is started.

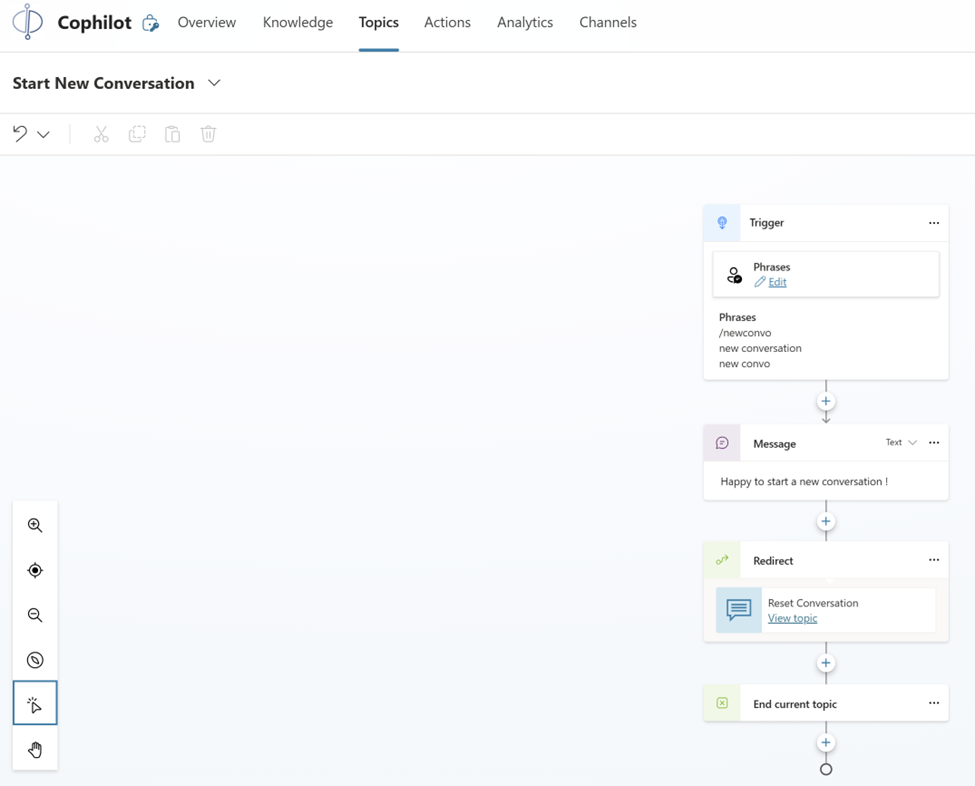
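The conversation keeps growing presumably because each turn is appended to a message history that is sent back to the model on the next call. Here is a minimal Python sketch of that accumulation. A stub stands in for the HTTP call. `take_turn` and `fake_model` are hypothetical names used for illustration.

```python
from typing import Callable

Message = dict[str, str]


def take_turn(history: list[Message], user_text: str,
              call_model: Callable[[list[Message]], str]) -> str:
    """Append the user turn, call the model with the whole history, append the answer."""
    history.append({"role": "user", "content": user_text})
    answer = call_model(history)  # the full history is sent on every call
    history.append({"role": "assistant", "content": answer})
    return answer


def fake_model(messages: list[Message]) -> str:
    # Hypothetical stub: reports how many messages it received.
    return f"I received {len(messages)} messages."


history: list[Message] = [{"role": "system", "content": "You are a helpful assistant."}]
take_turn(history, "Hi", fake_model)
take_turn(history, "Tell me more", fake_model)
print(len(history))  # → 5
```

Each call sends a longer history, so token usage also grows with every turn until the conversation is reset.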

New conversation

The first custom topic we author resets the conversation. I like to think of it as the "clear chat" button in ChatGPT.

It is good conversational UX practice to confirm to the user that their request has been understood. We display an acknowledgement message and then redirect to the Reset Conversation topic.

We are back on our feet.

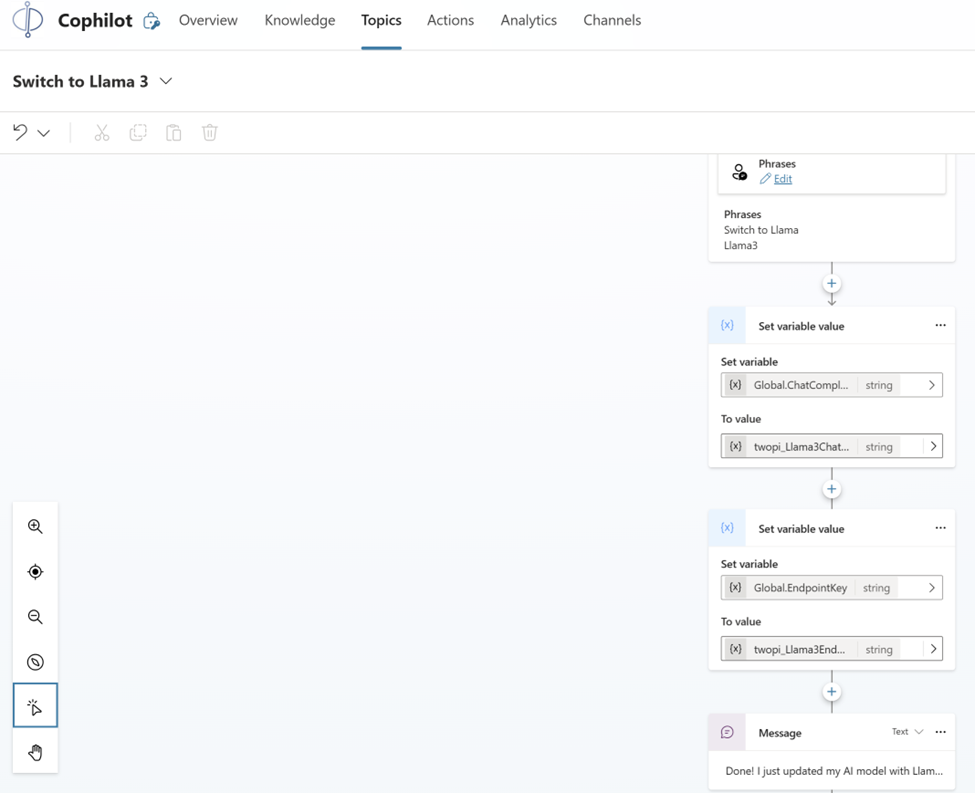
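Conceptually, a reset brings the conversation history back to its starting state. Here is a hypothetical Python sketch in which the history is kept as a list of messages and a reset keeps only the system prompt. The `reset_conversation` name is illustrative and not part of Copilot Studio.

```python
Message = dict[str, str]


def reset_conversation(history: list[Message]) -> list[Message]:
    """Return a fresh history that keeps only the system message(s)."""
    return [m for m in history if m["role"] == "system"]


history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Hi"},
    {"role": "assistant", "content": "Hello!"},
]
history = reset_conversation(history)
print(history)  # → [{'role': 'system', 'content': 'You are a helpful assistant.'}]
```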

Switching models

In the same spirit, we create two topics to switch between Llama 3.1 and Phi 3. In the Llama 3.1 topic, we set the model endpoint and key variables to the Llama 3.1 values stored in our environment variables.

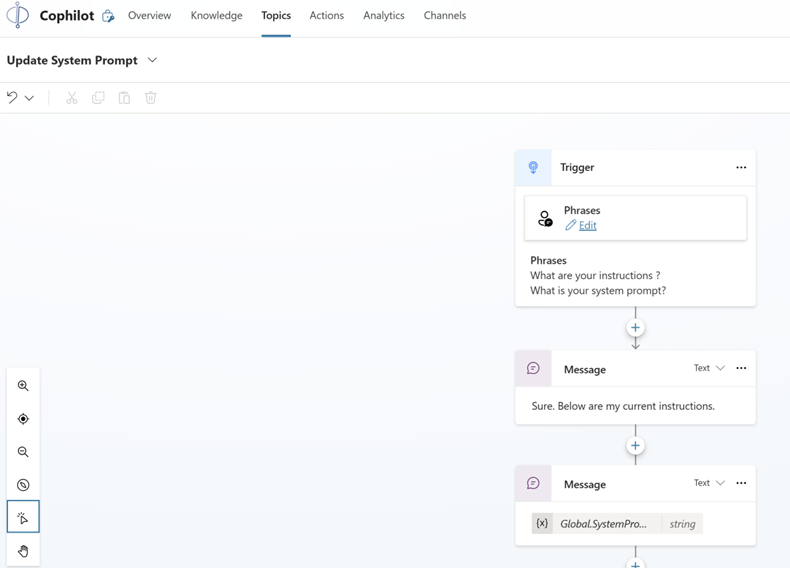

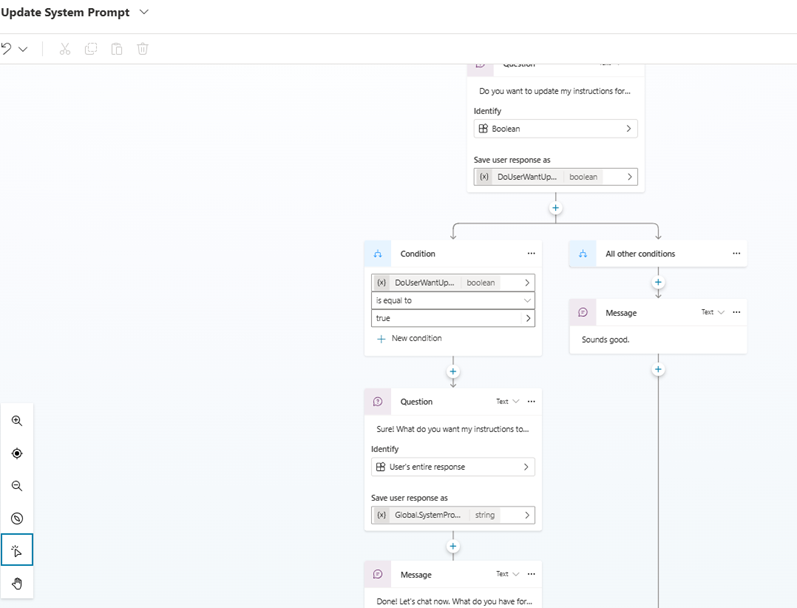
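In essence, the switching topics choose which endpoint and key the HTTP call uses. Here is a Python sketch of that choice. The environment variable names (`LLAMA31_ENDPOINT`, `LLAMA31_KEY`, `PHI3_ENDPOINT`, `PHI3_KEY`) and the model labels are hypothetical. Substitute the names of your own environment variables.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelConfig:
    endpoint: str
    api_key: str


# Hypothetical environment variable names for each model.
MODEL_ENV_VARS = {
    "llama-3.1": ("LLAMA31_ENDPOINT", "LLAMA31_KEY"),
    "phi-3": ("PHI3_ENDPOINT", "PHI3_KEY"),
}


def select_model(name: str) -> ModelConfig:
    """Return the endpoint and key for the requested model, read from the environment."""
    if name not in MODEL_ENV_VARS:
        raise ValueError(f"Unknown model: {name!r}")
    endpoint_var, key_var = MODEL_ENV_VARS[name]
    return ModelConfig(endpoint=os.environ[endpoint_var], api_key=os.environ[key_var])


# Placeholder values set only for this demo.
os.environ["LLAMA31_ENDPOINT"] = "https://llama.example.com"
os.environ["LLAMA31_KEY"] = "llama-key"
current = select_model("llama-3.1")
print(current.endpoint)  # → https://llama.example.com
```

Keeping the variable names in a single mapping means that supporting another model only requires a new entry.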

Viewing and dynamically updating the system prompt

While we are at it, we let the end user view and update the system prompt mid-conversation by updating the system prompt variable.
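This sketch assumes the system prompt is the first `system` message in the history sent to the model. Under that assumption, viewing and updating it mid-conversation could look like the Python below. The existing turns are kept, so the new prompt takes effect from the next model call onward. `get_system_prompt` and `set_system_prompt` are hypothetical helpers.

```python
from typing import Optional

Message = dict[str, str]


def get_system_prompt(history: list[Message]) -> Optional[str]:
    """Return the current system prompt, or None if there is none."""
    for message in history:
        if message["role"] == "system":
            return message["content"]
    return None


def set_system_prompt(history: list[Message], new_prompt: str) -> None:
    """Overwrite the system prompt in place, inserting one at the front if absent."""
    for message in history:
        if message["role"] == "system":
            message["content"] = new_prompt
            return
    history.insert(0, {"role": "system", "content": new_prompt})


history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Hi"},
    {"role": "assistant", "content": "Hello!"},
]
print(get_system_prompt(history))  # → You are a helpful assistant.
set_system_prompt(history, "Answer in French.")
print(get_system_prompt(history))  # → Answer in French.
```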